1. Confusion Matrix

Here is a typical confusion matrix for a test on a binary classification problem.

|  |  | Ground Truth |  |
|---|---|---|---|
|  |  | Positive | Negative |
| Predicted | Positive | TP | FP |
|  | Negative | FN | TN |
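To make the four cells concrete, here is a minimal sketch that counts them from true labels and predicted probabilities. The labels, scores and the `confusion_counts` helper are hypothetical, and it assumes a sample is predicted Positive when its score is greater than or equal to the threshold.

```python
def confusion_counts(y_true, y_score, threshold=0.5):
    """Return (TP, FP, FN, TN), predicting Positive when score >= threshold."""
    tp = fp = fn = tn = 0
    for label, score in zip(y_true, y_score):
        predicted_positive = score >= threshold
        if predicted_positive and label == 1:
            tp += 1  # positive sample correctly flagged
        elif predicted_positive and label == 0:
            fp += 1  # negative sample wrongly flagged
        elif not predicted_positive and label == 1:
            fn += 1  # positive sample missed
        else:
            tn += 1  # negative sample correctly rejected
    return tp, fp, fn, tn


# Hypothetical test set: 1 = Positive, 0 = Negative; scores are P(Positive).
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_score = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1, 0.7, 0.4]
print(confusion_counts(y_true, y_score, threshold=0.5))  # (3, 1, 1, 3)
```

Moving the threshold changes how samples move between the cells: with `threshold=0.0` every sample is predicted Positive, so FN and TN both drop to zero.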

The distribution of the classes might look something like this:

The x-axis is the predicted probability and the y-axis is the count (frequency) of observations.

For example, at prob = 0.2 there are 2 observations. The green line marks the threshold used to separate the positive and negative labels for this test.

2. Definitions

2.1 Recall/Sensitivity

Recall (sensitivity) is the probability that a sample is predicted Positive given that its true label is Positive. In short, it measures how many of the positive samples have been identified as positive. It is also called the True Positive Rate (TPR).

$$\text{Recall} = \text{TPR} = \frac{TP}{TP + FN}$$

2.2 Precision

Precision is defined as the number of TP over the number of all samples predicted to be positive:

$$\text{Precision} = \frac{TP}{TP + FP}$$

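2.3 Specificity

Specificity, also known as the True Negative Rate (TNR), measures how many of the negative samples have been identified as negative. It is not the same as precision: specificity is computed over the samples whose true label is Negative, while precision is computed over the samples predicted Positive.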

$$\text{Specificity} = \text{TNR} = \frac{TN}{TN + FP}$$
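The three definitions can be checked side by side with a small sketch. The counts below are hypothetical; they are chosen so that precision and specificity come out different, which is the point of the warning above.

```python
def recall(tp, fn):
    """True Positive Rate: share of actual positives predicted Positive."""
    return tp / (tp + fn)


def precision(tp, fp):
    """Share of Positive predictions that are actually positive."""
    return tp / (tp + fp)


def specificity(tn, fp):
    """True Negative Rate: share of actual negatives predicted Negative."""
    return tn / (tn + fp)


# Hypothetical confusion-matrix counts.
tp, fp, fn, tn = 30, 10, 20, 90
print(recall(tp, fn))       # 0.6
print(precision(tp, fp))    # 0.75
print(specificity(tn, fp))  # 0.9
```

Precision and specificity both involve FP, but precision divides by the predicted positives (TP + FP) while specificity divides by the actual negatives (TN + FP).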

3. Metrics to evaluate a classification test/model

3.1 F score

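The F-score combines Precision and Recall into a single number and is defined as:

$$F_\beta = (1 + \beta^2) \, \frac{P \cdot R}{\beta^2 P + R}$$

where $P$ is the Precision, $R$ is the Recall and $\beta$ takes the common values $\beta = 0.5, 1, 2$. With $\beta = 1$ this is the harmonic mean of Precision and Recall (the F1 score); $\beta > 1$ gives more weight to Recall and $\beta < 1$ gives more weight to Precision.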
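A direct translation of the formula into Python, as a small sketch (the `f_beta` name and the precision/recall values are illustrative). It shows how the score moves toward Precision for $\beta = 0.5$ and toward Recall for $\beta = 2$.

```python
def f_beta(precision, recall, beta=1.0):
    """F-beta score: beta > 1 favours recall, beta < 1 favours precision."""
    if precision == 0 and recall == 0:
        return 0.0  # avoid 0/0; the score is 0 by convention
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Hypothetical classifier with P = 0.75 and R = 0.6.
for beta in (0.5, 1, 2):
    print(beta, round(f_beta(0.75, 0.6, beta), 4))
# 0.5 0.7143
# 1 0.6667
# 2 0.625
```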

3.2 Precision/Recall curve

The Precision/Recall curve shows the Recall (y-axis) against the Precision (x-axis) for various threshold values (decision boundaries). The ideal point is Precision = 1 and Recall = 1, the top-right corner of the plot.
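Each point of the curve comes from one threshold. A minimal sketch, assuming hypothetical labels and scores and using every distinct score as a candidate threshold (predict Positive when score >= threshold):

```python
def pr_curve(y_true, y_score):
    """Return (threshold, precision, recall) for each distinct score, strictest first."""
    n_pos = sum(y_true)
    points = []
    for t in sorted(set(y_score), reverse=True):
        tp = sum(1 for y, s in zip(y_true, y_score) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(y_true, y_score) if s >= t and y == 0)
        # t is an actual score, so at least one sample is predicted Positive.
        points.append((t, tp / (tp + fp), tp / n_pos))
    return points


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_score = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1, 0.7, 0.4]
for t, p, r in pr_curve(y_true, y_score):
    print(f"threshold={t}: precision={p:.3f}, recall={r:.2f}")
```

Lowering the threshold can only raise recall, while precision may go up or down, which is why PR curves are often jagged.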

3.3 ROC (Receiver Operating Characteristic) Metric

ROC is a visual way of inspecting the performance of a binary classification algorithm. The ROC curve plots the TPR (sensitivity) against the FPR (1 - specificity) for different threshold values.

Below is a typical ROC curve for 2 tests, where each data point is the pair (1 - specificity, sensitivity) obtained when using a particular threshold.

Let's look at several ROC curve shapes and what they tell us about the performance of the classifier. The baseline (the diagonal TPR = FPR) indicates a classifier that is no better than random guessing. An underperforming model has data points below the baseline, while a good classifier has points above it.
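The ROC points can be generated the same way as the PR points, by sweeping the threshold. This is a sketch on hypothetical data (predict Positive when score >= threshold); it prepends (0, 0) for the "predict nothing Positive" threshold so the curve starts at the origin.

```python
def roc_points(y_true, y_score):
    """(FPR, TPR) pairs from the strictest to the loosest threshold, starting at (0, 0)."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    points = [(0.0, 0.0)]
    for t in sorted(set(y_score), reverse=True):
        tp = sum(1 for y, s in zip(y_true, y_score) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(y_true, y_score) if s >= t and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_score = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1, 0.7, 0.4]
roc = roc_points(y_true, y_score)
# Every point lies on or above the diagonal TPR = FPR for this classifier.
print(all(tpr >= fpr for fpr, tpr in roc))  # True
```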

3.4 AUROC: Area under the ROC curve

AUROC (often just called AUC) is useful when comparing different models/tests, as we can select the model with the highest AUC value. Random guessing gives an AUC of 0.5 and a perfect classifier gives 1.

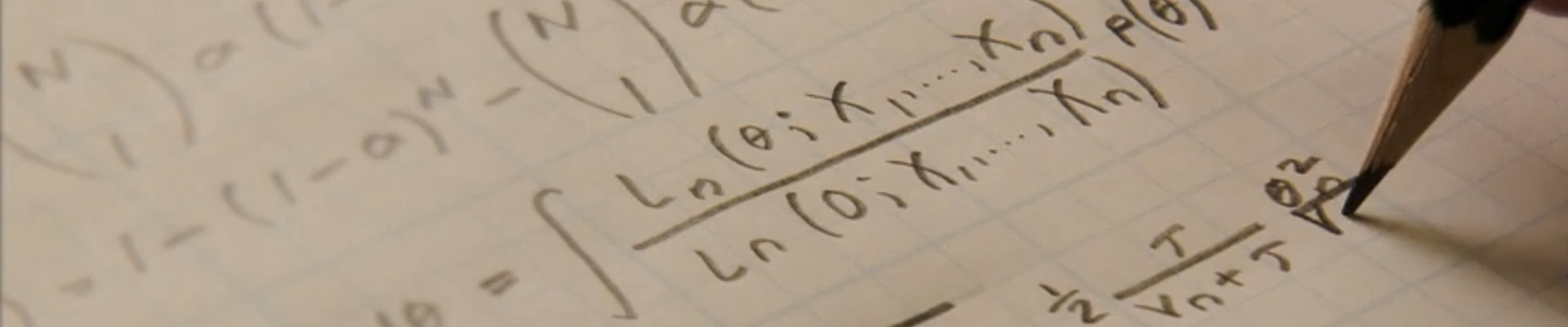

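AUROC can be calculated using the trapezoid rule, by adding up the areas of the trapezoids between consecutive points of the ROC curve:

$$\text{AUROC} \approx \sum_{i=1}^{n-1} (x_{i+1} - x_i) \, \frac{y_i + y_{i+1}}{2}$$

where $(x_i, y_i) = (\text{FPR}_i, \text{TPR}_i)$ are the $n$ points of the ROC curve sorted by increasing FPR.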
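The trapezoid sum is only a few lines of Python. This sketch assumes the ROC points are already sorted by increasing FPR; the `roc` list is a hypothetical curve (8 samples, 4 positive and 4 negative).

```python
def trapezoid_auc(points):
    """Area under a curve given as (x, y) points sorted by increasing x."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2  # one trapezoid per segment
    return area


# Hypothetical ROC curve as (FPR, TPR) points.
roc = [(0.0, 0.0), (0.0, 0.25), (0.0, 0.5), (0.0, 0.75), (0.25, 0.75),
       (0.5, 0.75), (0.5, 1.0), (0.75, 1.0), (1.0, 1.0)]
print(trapezoid_auc(roc))                     # 0.875
print(trapezoid_auc([(0.0, 0.0), (1.0, 1.0)]))  # 0.5, the random baseline
```

Vertical segments (same FPR) contribute zero area, so repeated FPR values do not need special handling.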

4. Some Notes

4.1 Imbalanced dataset

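Many real-world problems do not have balanced classes, and ROC becomes less informative on imbalanced datasets: since FPR = FP / (FP + TN), a very large number of negatives keeps the FPR small even when the false positives far outnumber the true positives.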
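A small worked example of this effect, with hypothetical numbers: 100 positives, 10,000 negatives and an ROC point of TPR = 0.9, FPR = 0.05 that looks good on the ROC plot.

```python
n_pos, n_neg = 100, 10_000  # hypothetical, heavily imbalanced test set
tpr, fpr = 0.90, 0.05       # an ROC operating point that looks good

tp = round(tpr * n_pos)     # 90 true positives
fp = round(fpr * n_neg)     # 500 false positives
precision = tp / (tp + fp)  # most Positive predictions are wrong
print(f"TPR={tpr}, FPR={fpr}, precision={precision:.3f}")
# TPR=0.9, FPR=0.05, precision=0.153
```

A 5% FPR sounds small, but on 10,000 negatives it produces 500 false alarms against only 90 hits.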

The distribution of the classes might look something like this:

One effective approach to avoid potential issues with imbalanced datasets is to focus on the early retrieval area, the left part of the ROC curve where the FPR is small, so that model performance is analyzed at operating points with few false positives.
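One way to summarize the early retrieval area is a partial AUC, the area under the ROC curve only up to some maximum FPR. The sketch below is a plain trapezoid version with linear interpolation at the cut-off; the `partial_auc` name and the example curve are hypothetical. (scikit-learn's `roc_auc_score` has a related `max_fpr` option, which additionally standardizes the result.)

```python
def partial_auc(points, max_fpr):
    """Trapezoid area under an ROC curve restricted to FPR <= max_fpr.

    `points` are (FPR, TPR) pairs sorted by increasing FPR.
    The segment that crosses max_fpr is linearly interpolated.
    """
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x0 >= max_fpr:
            break
        if x1 > max_fpr:
            # Interpolate the TPR at FPR = max_fpr and stop at the cut-off.
            y1 = y0 + (y1 - y0) * (max_fpr - x0) / (x1 - x0)
            x1 = max_fpr
        area += (x1 - x0) * (y0 + y1) / 2
    return area


roc = [(0.0, 0.0), (0.0, 0.25), (0.0, 0.5), (0.0, 0.75), (0.25, 0.75),
       (0.5, 0.75), (0.5, 1.0), (0.75, 1.0), (1.0, 1.0)]
print(partial_auc(roc, 0.25))         # 0.1875
print(partial_auc(roc, 0.25) / 0.25)  # 0.75, as a fraction of the maximum possible area
```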

4.2 Probability calibration

ROC and AUROC are insensitive to whether the predicted probabilities are properly calibrated to actually represent probabilities of class membership, because they depend only on how the scores rank the samples, not on the score values themselves.
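This can be checked with the rank interpretation of AUROC: the probability that a randomly chosen positive gets a higher score than a randomly chosen negative (ties counted as one half). In this sketch on hypothetical data, raising every score to the 5th power badly distorts the "probabilities" but keeps their order, so the AUROC does not change.

```python
def auroc_rank(y_true, y_score):
    """AUROC as P(score of a random positive > score of a random negative)."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_score = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1, 0.7, 0.4]
# Order-preserving but poorly calibrated version of the same scores.
distorted = [s ** 5 for s in y_score]
print(auroc_rank(y_true, y_score), auroc_rank(y_true, distorted))  # 0.875 0.875
```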

4.3 Multi-class problems

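ROC curves can be extended to problems with 3 or more classes, for example with a one-vs-rest approach: each class in turn is treated as Positive and all the other classes as Negative.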
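A one-vs-rest sketch: for each class, the column of predicted probabilities for that class is scored against a binary "is this class" label, and the per-class AUROCs can then be averaged (macro average). The classes, labels and probabilities are hypothetical.

```python
def binary_auroc(labels, scores):
    """Rank-based AUROC for boolean labels (ties count as half a win)."""
    pos = [s for y, s in zip(labels, scores) if y]
    neg = [s for y, s in zip(labels, scores) if not y]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def one_vs_rest_auroc(y_true, proba, classes):
    """Per-class AUROC, treating class c as Positive and all others as Negative."""
    result = {}
    for k, c in enumerate(classes):
        labels = [y == c for y in y_true]
        scores = [row[k] for row in proba]  # column k = P(class c)
        result[c] = binary_auroc(labels, scores)
    return result


classes = ["cat", "dog", "bird"]
y_true = ["cat", "dog", "bird", "cat", "dog", "bird"]
proba = [
    [0.7, 0.2, 0.1],
    [0.3, 0.6, 0.1],
    [0.2, 0.3, 0.5],
    [0.4, 0.4, 0.2],
    [0.5, 0.3, 0.2],
    [0.1, 0.1, 0.8],
]
per_class = one_vs_rest_auroc(y_true, proba, classes)
macro = sum(per_class.values()) / len(per_class)
print(per_class)        # {'cat': 0.875, 'dog': 0.8125, 'bird': 1.0}
print(round(macro, 4))  # 0.8958
```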

4.4 Choosing Threshold

Choosing a classification threshold is a business decision: it depends on whether it matters more to minimize the FPR (fewer false alarms) or to maximize the TPR (fewer missed positives).
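As one illustration of such a decision, the sketch below assumes the business constraint is an FPR budget and picks the threshold with the highest TPR that stays within it (ties broken in favour of fewer false positives). The `pick_threshold` helper and the data are hypothetical; other rules, such as a cost per false positive and per false negative, are equally valid.

```python
def pick_threshold(y_true, y_score, max_fpr):
    """Return (threshold, TPR, FPR) with the highest TPR among thresholds with FPR <= max_fpr."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    best = None
    for t in sorted(set(y_score), reverse=True):
        tp = sum(1 for y, s in zip(y_true, y_score) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(y_true, y_score) if s >= t and y == 0)
        tpr, fpr = tp / n_pos, fp / n_neg
        if fpr > max_fpr:
            break  # FPR only grows as the threshold decreases
        if best is None or tpr > best[1]:
            best = (t, tpr, fpr)
    return best  # None if even the strictest threshold exceeds the budget


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_score = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1, 0.7, 0.4]
print(pick_threshold(y_true, y_score, max_fpr=0.25))  # (0.7, 0.75, 0.0)
print(pick_threshold(y_true, y_score, max_fpr=0.5))   # (0.3, 1.0, 0.5)
```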

5. Precision/Recall Curve

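The PR curve is more appropriate than the ROC curve when the class distribution is highly skewed (strong class imbalance), because neither precision nor recall depends on the number of true negatives.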
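A common single-number summary of the PR curve is average precision, $\text{AP} = \sum_n (R_n - R_{n-1}) P_n$ over thresholds from strictest to loosest. Unlike AUROC, whose random baseline is always 0.5, the precision of a random classifier equals the fraction of positives, so the PR baseline moves with the imbalance. A sketch on hypothetical data:

```python
def average_precision(y_true, y_score):
    """Step-wise area under the PR curve: sum of (R_n - R_{n-1}) * P_n."""
    n_pos = sum(y_true)
    ap, prev_recall = 0.0, 0.0
    for t in sorted(set(y_score), reverse=True):
        tp = sum(1 for y, s in zip(y_true, y_score) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(y_true, y_score) if s >= t and y == 0)
        recall, precision = tp / n_pos, tp / (tp + fp)
        ap += (recall - prev_recall) * precision  # only recall gains add area
        prev_recall = recall
    return ap


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_score = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1, 0.7, 0.4]
print(round(average_precision(y_true, y_score), 4))  # 0.9167
print(sum(y_true) / len(y_true))  # random-classifier baseline: 0.5
```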