The following steps apply only if the NVIDIA card is used for GPU-accelerated computing, not for display. My machine has 2 graphics cards: an ATI Radeon (not built-in) for display and the NVIDIA GeForce for computing. Before starting, make sure to save all your work.

**1. Download the driver from the NVIDIA website**

Make a note of the directory where the file is saved (usually the `Downloads/` folder).

**2. Get into the text-only console (TTY)**

**3. Enter login and password**

**4. Kill the current X-server session**

**5. Optional: purge all NVIDIA drivers (not required, but useful if you want a fresh start)**

**6. Enter runlevel 3**

**7. Run the install**

Go to the directory where the `NVIDIA*.run` file is saved. If you have only one install file, type `sudo sh NVI*.run --no-opengl-files`. Otherwise, replace `NVI*.run` with the full name of the file.

It is important to include the `--no-opengl-files` option in the command. If it is not included, the install will overwrite the OpenGL files used by the graphics card that drives the display, and you will end up with a machine stuck in a login loop.

**8. If error messages pop up, dismiss them**

**9. Do not update Xconfig**

You will be asked whether you wish to update Xconfig: select NO. If you choose YES, the NVIDIA card will take over the display work, which will create conflicts with the other graphics card.

**10. Start the X-server session**

**11. Exit the TTY mode**

`Ctrl` + `Alt` + `F1`

`# Enter login and password`

`sudo service lightdm stop`

`sudo apt-get purge 'nvidia-*'`

`sudo init 3`

`cd {location_of_nvidia_file}`

`sudo sh NVI*.run --no-opengl-files`

`# Follow the instructions`

`sudo service lightdm start`

`Ctrl` + `Alt` + `F7`
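If you are unsure whether the `NVI*.run` wildcard will match exactly one file, a small dry run can help before you drop to the TTY. The sketch below only prints the command, it does not run the installer. The function name `build_install_command` and the `~/Downloads` location are my own assumptions for illustration.

```python
import glob
import os


def build_install_command(directory):
    """Return the installer command for the single NVIDIA*.run file in `directory`."""
    matches = sorted(glob.glob(os.path.join(directory, "NVIDIA*.run")))
    if len(matches) != 1:
        # With zero or several files the wildcard is ambiguous: use the full name instead.
        raise ValueError(f"expected exactly one NVIDIA*.run file, found {len(matches)}")
    # --no-opengl-files leaves the display card's OpenGL files untouched.
    return ["sudo", "sh", matches[0], "--no-opengl-files"]


if __name__ == "__main__":
    # Assumption: the driver was saved in ~/Downloads; adjust to your own folder.
    try:
        print(" ".join(build_install_command(os.path.expanduser("~/Downloads"))))
    except ValueError as err:
        print(f"Cannot build the command: {err}")
```

If the script reports more than one file, type the full file name in step 7 instead of the wildcard.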

Voila! You are all set with the installation of the driver!
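To double-check that the driver is actually loaded, you can query `nvidia-smi`. This is a minimal sketch, assuming the `nvidia-smi` utility was installed along with the driver and is on your `PATH`. The helper name `driver_report` is hypothetical.

```python
import shutil
import subprocess


def driver_report():
    """Return one 'name, driver_version' line per GPU, or None if the query fails."""
    if shutil.which("nvidia-smi") is None:
        return None  # tool not installed or not on PATH
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return None  # the tool exists but could not talk to the driver
    return result.stdout.strip().splitlines()


if __name__ == "__main__":
    report = driver_report()
    if report:
        for line in report:
            print(line)
    else:
        print("nvidia-smi not found or driver not loaded")
```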

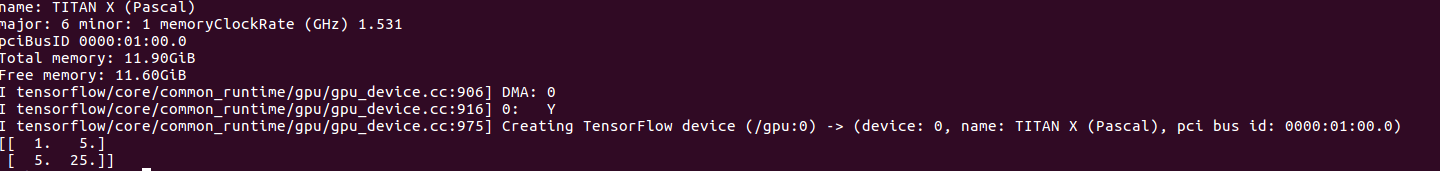

The next and final step is to make sure that TensorFlow detects the GPU. Here is a short script:

The script calculates the matrix product of a and b, where a is a (2,1) matrix and b is a (1,2) matrix.
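A check along these lines could look like the sketch below. It assumes TensorFlow 2.x (eager execution and `tf.config.list_physical_devices`), which may differ from the version you have installed. The function name and the matrix values are made up for illustration.

```python
import importlib.util


def check_tensorflow_gpu():
    """Return (gpu_names, product), or None when TensorFlow is not installed."""
    if importlib.util.find_spec("tensorflow") is None:
        return None
    import tensorflow as tf  # assumption: TensorFlow 2.x

    # GPUs that TensorFlow can see; an empty list means CPU only.
    gpus = tf.config.list_physical_devices("GPU")

    a = tf.constant([[1.0], [2.0]])  # shape (2, 1), illustrative values
    b = tf.constant([[3.0, 4.0]])    # shape (1, 2), illustrative values
    c = tf.matmul(a, b)              # shape (2, 2)
    return [gpu.name for gpu in gpus], c.numpy().tolist()


if __name__ == "__main__":
    outcome = check_tensorflow_gpu()
    if outcome is None:
        print("TensorFlow is not installed")
    else:
        gpu_names, product = outcome
        print("GPUs detected:", gpu_names if gpu_names else "none")
        print("a x b =", product)
```

An empty GPU list means TensorFlow fell back to the CPU, so the multiplication still runs but without acceleration.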

If the GPU is detected by TF, you should see something like this: